You're Reading Reviews Wrong — What Star Ratings Actually Miss

Your product has a 4.2 on G2. Your competitor has a 4.4. What do you do with that information?

If you're like most marketing teams, you do absolutely nothing. Maybe you put your own rating on your homepage. Maybe you feel vaguely bad that you're 0.2 stars behind. But a 4.2 versus a 4.4 doesn't tell you anything actionable. It doesn't tell you why people love you, why they churn, or what specific thing your competitor does that makes people give them that extra star.

Star ratings are the fast food of customer intelligence. They're easy to consume and completely devoid of nutrition. The actual meal — the part that changes your product roadmap, your messaging, your sales objections playbook — is buried in the text of the reviews themselves.

And almost nobody reads them properly.

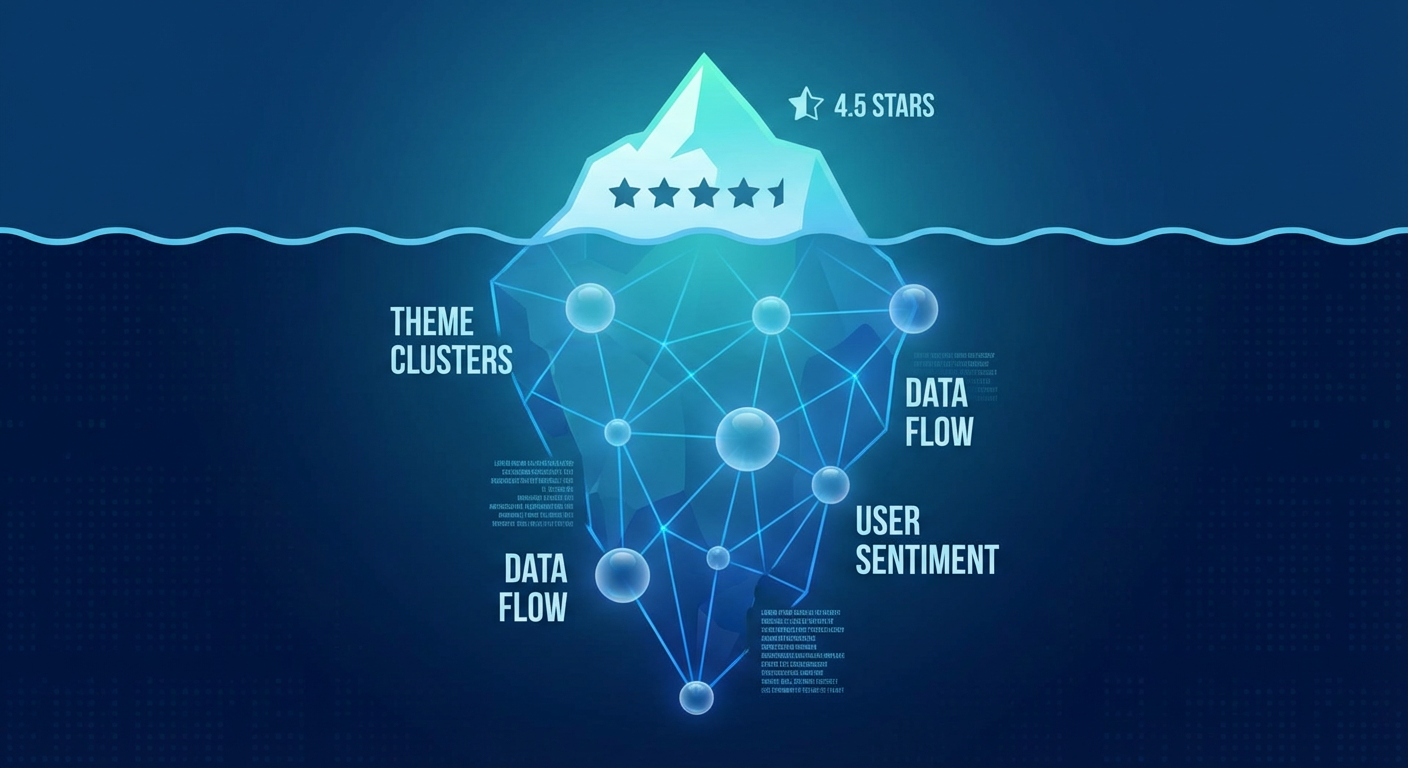

The Review Iceberg

Think of every review as an iceberg. The star rating is the tiny visible tip. Below the waterline is the actual intelligence: specific feature complaints, workflow descriptions, competitor comparisons, emotional language about support experiences, and offhand comments about why they almost switched.

One five-star Trustpilot review that says "finally replaced our old system after the API kept breaking" tells you more about that customer's buying trigger than any intent data platform ever will. One three-star Google Maps review that says "great product but the onboarding took our team three weeks" is a gift-wrapped roadmap for your customer success team.

The problem is scale. A SaaS company with a decent market presence might have 200 reviews on G2, 150 on Trustpilot, and another 80 on Google Maps. Reading all 430 of them individually would take someone a full day. Repeat that for three competitors and most of the working week is gone. Nobody has the bandwidth, so teams default to skimming the first page and calling it research.

This is where review sentiment analysis stops being an academic exercise and starts being a genuine competitive weapon. When you can process hundreds of reviews and extract the patterns — not individual opinions, but the recurring themes across dozens of people — you're operating with intelligence that your competitors don't have because they're still staring at star ratings.

What Themes Actually Look Like

When you run AI-powered customer sentiment analysis across a large set of reviews, you stop seeing individual complaints and start seeing structural patterns. These patterns fall into roughly three buckets.

The first bucket is "love it but" themes. These are the things customers genuinely appreciate, paired with the friction that almost made them leave. "The analytics are incredible but reporting takes forever to load." If you see that complaint in fifteen different reviews, that's not a bug report. That's a retention risk hiding in plain sight. Your product team needs to see this yesterday.

The second bucket is "switched from" themes. These are pure gold for positioning. When reviewers mention what they used before and why they moved, they're giving you a migration playbook. If forty people mention switching from Competitor X because of pricing, and your messaging doesn't address that migration path, you're leaving deals on the table.

The third bucket is "dealbreaker" themes. These show up most clearly in one- and two-star reviews. People don't leave long negative reviews for fun. They do it because they're genuinely frustrated about a specific thing that mattered to them. When you see "no SSO" appear in eight different reviews, that's a feature gate that's costing you enterprise deals. When "terrible mobile experience" pops up repeatedly, that's a whole market segment you're losing.

No dashboard or NPS survey surfaces these patterns at this level of specificity. Only the raw text of the reviews does. You just need a way to read all of them at once.
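A first pass at the three buckets doesn't need anything fancy. The Python sketch below is a deliberately crude, keyword-based stand-in for the AI-powered theme extraction described here. The `Review` type, the cue patterns, and the rating thresholds are all assumptions chosen for illustration, and a single review can land in more than one bucket.

```python
import re
from dataclasses import dataclass


@dataclass
class Review:
    rating: int  # 1-5 stars
    text: str


# Hypothetical cue patterns; swap in an LLM or trained classifier for real use.
SWITCH_CUES = re.compile(r"\b(?:switched|moved|migrated) from\b|\breplaced\b", re.IGNORECASE)
CONTRAST_CUES = re.compile(r"\b(?:but|although|except)\b", re.IGNORECASE)


def buckets(review: Review) -> list[str]:
    """Return every bucket a review plausibly belongs to (possibly none)."""
    found = []
    if SWITCH_CUES.search(review.text):
        found.append("switched from")
    if review.rating <= 2:
        # Low ratings are treated as candidate dealbreakers.
        found.append("dealbreaker")
    elif CONTRAST_CUES.search(review.text):
        # Mid-to-high ratings with a contrast word suggest "love it, but...".
        found.append("love it but")
    return found


reviews = [
    Review(5, "Finally replaced our old system after the API kept breaking"),
    Review(3, "Great product but the onboarding took our team three weeks"),
    Review(1, "No SSO, so our security team blocked the rollout"),
]
for r in reviews:
    print(buckets(r), r.text)
```

Keyword cues miss sarcasm and paraphrase ("we came over from..."), which is one reason to hand the real tagging to a language model. The value of a sketch like this is making the bucket definitions explicit enough to argue about.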

Your Competitors' Reviews Are Even More Valuable

Here's the part that most teams miss entirely. Your own reviews tell you what to fix. Your competitors' reviews tell you how to win.

When you run sentiment analysis on a competitor's Trustpilot page, you're not being nosy. You're extracting the exact language their unhappy customers use to describe their frustrations. That language becomes your ad copy. Those frustrations become your feature comparison page. Their recurring complaints become your sales team's objection-handling ammunition.

I've seen teams pull a competitor's G2 reviews and find that 30% of negative reviews mentioned the same issue — painful data migration. They built a landing page specifically about painless migration, ran ads targeting that competitor's brand keywords, and saw conversion rates double versus their generic campaign. They didn't guess what the market wanted. They read it in their competitor's own review section.

This is why competitor review analysis and competitor pain point research shouldn't be a quarterly exercise. It should be something you do every time you launch a campaign or update your positioning.

Why Manual Analysis Breaks Down

The reason most teams still rely on vibes instead of actual review data comes down to logistics. Reviews are scattered across at least three or four platforms. Trustpilot has one format. G2 has another. Google Maps reviews are structured differently again. App Store reviews are their own thing. And none of these platforms talk to each other.

To do proper review sentiment analysis, you'd need to pull data from each platform, normalize it into a comparable format, categorize the themes, score the sentiment, and then cross-reference the patterns. That's a data engineering project, not a marketing afternoon.
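The normalize, categorize, and rank steps can be sketched in a few dozen lines of Python. Everything platform-specific here is hypothetical: the field names in `FIELD_MAP` are placeholders, not real Trustpilot, G2, or Google Maps export schemas; every platform is assumed to rate on a 1-5 scale; the theme keywords stand in for proper theme extraction; and "negative" is assumed to mean two stars or fewer. The output is each theme's count and its share of negative reviews, the same kind of figure as the 30% migration stat above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Review:
    platform: str
    rating: float  # assumed to be on a 1-5 scale on every platform
    text: str


# Hypothetical (rating field, text field) per platform, not real export schemas.
FIELD_MAP = {
    "trustpilot": ("stars", "body"),
    "g2": ("star_rating", "review_text"),
    "google_maps": ("rating", "snippet"),
}

# Hypothetical theme keywords; plain substring matching on lowercased text.
THEMES = {
    "onboarding": ("onboarding", "setup"),
    "pricing": ("pricing", "price", "expensive"),
    "mobile": ("mobile app", "iphone", "android"),
}


def normalize(platform: str, raw: dict) -> Review:
    """Map one platform-specific record onto the shared Review shape."""
    rating_key, text_key = FIELD_MAP[platform]
    return Review(platform, float(raw[rating_key]), raw[text_key].strip())


def themes_in(text: str) -> set[str]:
    lowered = text.lower()
    return {name for name, kws in THEMES.items() if any(kw in lowered for kw in kws)}


def rank_negative_themes(reviews: list[Review], max_rating: float = 2) -> list[tuple[str, int, float]]:
    """Return (theme, review count, share of negative reviews), most frequent first."""
    negative = [r for r in reviews if r.rating <= max_rating]
    counts = Counter()
    for r in negative:
        counts.update(themes_in(r.text))  # a theme counts once per review
    return [(theme, n, n / len(negative)) for theme, n in counts.most_common()]


raw = [
    ("trustpilot", {"stars": 1, "body": "Onboarding took three weeks."}),
    ("g2", {"star_rating": 2, "review_text": "Setup was painful and pricing is unclear."}),
    ("google_maps", {"rating": 5, "snippet": "Great support team."}),
    ("g2", {"star_rating": 1, "review_text": "Onboarding never finished."}),
]
reviews = [normalize(platform, record) for platform, record in raw]
ranked = rank_negative_themes(reviews)
for theme, n, share in ranked:
    print(f"{theme}: {share:.0%} of negative reviews ({n})")
```

Counting each theme at most once per review keeps one long rant from inflating a theme, so the share reads as "how many unhappy customers raised this" rather than "how often the word appeared."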

This is exactly the kind of work AI agents handle well. An agent can hit Trustpilot, Google Maps, and G2 in a single pass, pull the actual review text, and deliver a structured analysis. Not just "overall sentiment is 72% positive" but "the top three negative themes are onboarding complexity, pricing transparency, and mobile app performance, with onboarding mentioned 4x more frequently than any other issue."

That specificity is the difference between a sentiment score that ends up in a slide deck and an insight that ends up changing your roadmap.

The Sentiment Trend Nobody Watches

There's one more thing that most teams miss: sentiment drift over time.

A product that had overwhelmingly positive reviews eighteen months ago might be trending negative now. Maybe a competitor launched a feature that raised the bar. Maybe a recent update broke something that used to work well. Maybe the market's expectations shifted and what was "good enough" last year now feels dated.

If you're only checking reviews when it's time to update your battlecard, you're seeing a snapshot when you need a trendline. The teams that win are the ones watching sentiment shift in near real-time — catching the drift before it becomes a crisis.

When three new reviews in a week all mention the same frustration that wasn't there before, that's an early warning signal. You don't need to wait for your NPS score to drop next quarter to know something changed. The reviews are telling you right now.
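Catching drift is mostly a windowed comparison. This Python sketch assumes each review has already been tagged with a date and a set of themes by whatever extraction step you use. The seven-day window, the three-mention threshold, and the sample history are illustrative, mirroring the "three new reviews in a week" signal rather than any established rule.

```python
from collections import Counter
from datetime import date, timedelta


def new_recurring_themes(tagged_reviews, today, window_days=7, min_mentions=3):
    """Flag themes seen in >= min_mentions reviews in the last window_days
    that never appeared in any earlier review.

    tagged_reviews: iterable of (review_date, set_of_theme_names).
    """
    window_start = today - timedelta(days=window_days)
    recent, earlier = Counter(), Counter()
    for review_date, themes in tagged_reviews:
        if review_date > today:
            continue  # ignore anything dated after the reference day
        if review_date > window_start:
            recent.update(themes)
        else:
            earlier.update(themes)
    return sorted(
        theme for theme, n in recent.items()
        if n >= min_mentions and earlier[theme] == 0
    )


# Illustrative history, not real data.
history = [
    (date(2024, 3, 2), {"pricing"}),
    (date(2024, 5, 20), {"onboarding"}),
    (date(2024, 6, 10), {"sync errors"}),
    (date(2024, 6, 12), {"sync errors", "pricing"}),
    (date(2024, 6, 13), {"sync errors"}),
]
flagged = new_recurring_themes(history, today=date(2024, 6, 14))
print(flagged)  # ['sync errors']
```

Requiring zero earlier mentions is strict. Comparing each theme's recent rate against its rate in the prior window would also catch an existing complaint that suddenly spikes.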

The "So What?"

Review sentiment analysis isn't a nice-to-have analytics exercise. It's the fastest way to understand what your market actually thinks — about you and about your competitors — using words that real customers chose to write without being prompted by a survey.

Stop looking at star ratings. Start reading the text. And if you don't have time to read hundreds of reviews across four platforms — which you don't — let an AI agent do the reading and give you the patterns that matter.

Your customers are already telling you exactly what they want. The question is whether you're listening in the right places.

Try These Agents

- Sentiment Analysis Tool — Analyze sentiment across Trustpilot, Google Maps, Twitter, Reddit, TikTok, and Instagram in one pass

- Competitor Review Analysis — Extract positioning opportunities from competitor reviews on Trustpilot

- Competitor Pain Points — Research competitor weaknesses from reviews and employee feedback

- Google Maps Review Analyzer — Extract sentiment and themes from Google Maps reviews