The "Cheaper" Model Cost Me the Same as the Expensive One

I ran a SQL benchmark on Claude 4.5 Sonnet and Opus. Sonnet is supposed to be the budget option. It ended up costing almost exactly the same.

What I Tested

I wanted to see if an AI agent could actually write SQL like a human analyst. So I gave several models, Sonnet and Opus among them, access to five SaaS tables (companies, users, subscriptions, feature usage, invoices) and asked three questions:

- How many companies are in each industry?

- Which companies have overdue invoices over $1,000?

- Find companies with 2+ consecutive months of declining usage.

That last one needs window functions and joins. It's not trivial.
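For a sense of what "not trivial" means here, this is a minimal sketch of one way to answer the third question. It's not the SQL either model produced. It runs against SQLite from Python so it's self-contained, and the column names (company_id, usage_date, usage_count, name) are hypothetical because the real schema isn't shown. It rolls the daily logs up to monthly totals, then uses LAG to put each month next to the two before it. "2+ consecutive months of declining usage" is read as two month-over-month drops in a row, and adjacent rows are assumed to be adjacent calendar months (no gaps). LAG needs SQLite 3.25 or newer.

```python
import sqlite3

# Hypothetical columns: the post doesn't show the real CloudMetrics schema.
SCHEMA = """
CREATE TABLE companies (company_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE feature_usage (company_id INTEGER, usage_date TEXT, usage_count INTEGER);
"""

DECLINING_USAGE_SQL = """
WITH monthly AS (
    -- Roll daily logs up to one total per company per month.
    -- strftime is SQLite-specific; Snowflake would use DATE_TRUNC('month', usage_date).
    SELECT company_id,
           strftime('%Y-%m', usage_date) AS month,
           SUM(usage_count) AS total
    FROM feature_usage
    GROUP BY company_id, strftime('%Y-%m', usage_date)
),
lagged AS (
    -- Pull the previous two months' totals onto each row.
    SELECT company_id, month, total,
           LAG(total, 1) OVER (PARTITION BY company_id ORDER BY month) AS prev_1,
           LAG(total, 2) OVER (PARTITION BY company_id ORDER BY month) AS prev_2
    FROM monthly
)
-- Two month-over-month drops in a row means two consecutive declining months.
SELECT DISTINCT c.name
FROM lagged AS l
JOIN companies AS c ON c.company_id = l.company_id
WHERE l.total < l.prev_1 AND l.prev_1 < l.prev_2
ORDER BY c.name
"""


def declining_companies(conn: sqlite3.Connection) -> list[str]:
    return [row[0] for row in conn.execute(DECLINING_USAGE_SQL)]


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO companies VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])
conn.executemany(
    "INSERT INTO feature_usage VALUES (?, ?, ?)",
    [
        # Acme: 100 -> 80 -> 60, two drops in a row
        (1, "2024-01-05", 100), (1, "2024-02-05", 80), (1, "2024-03-05", 60),
        # Globex: 100 -> 80 -> 90, only one drop
        (2, "2024-01-05", 100), (2, "2024-02-05", 80), (2, "2024-03-05", 90),
    ],
)
print(declining_companies(conn))  # ['Acme']
```

The first month of each company gets NULL from LAG, and any comparison with NULL filters the row out, so companies with fewer than three months of data never match.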

Both Sonnet and Opus got all three right. They were the only models that did. But then I looked at the bill.

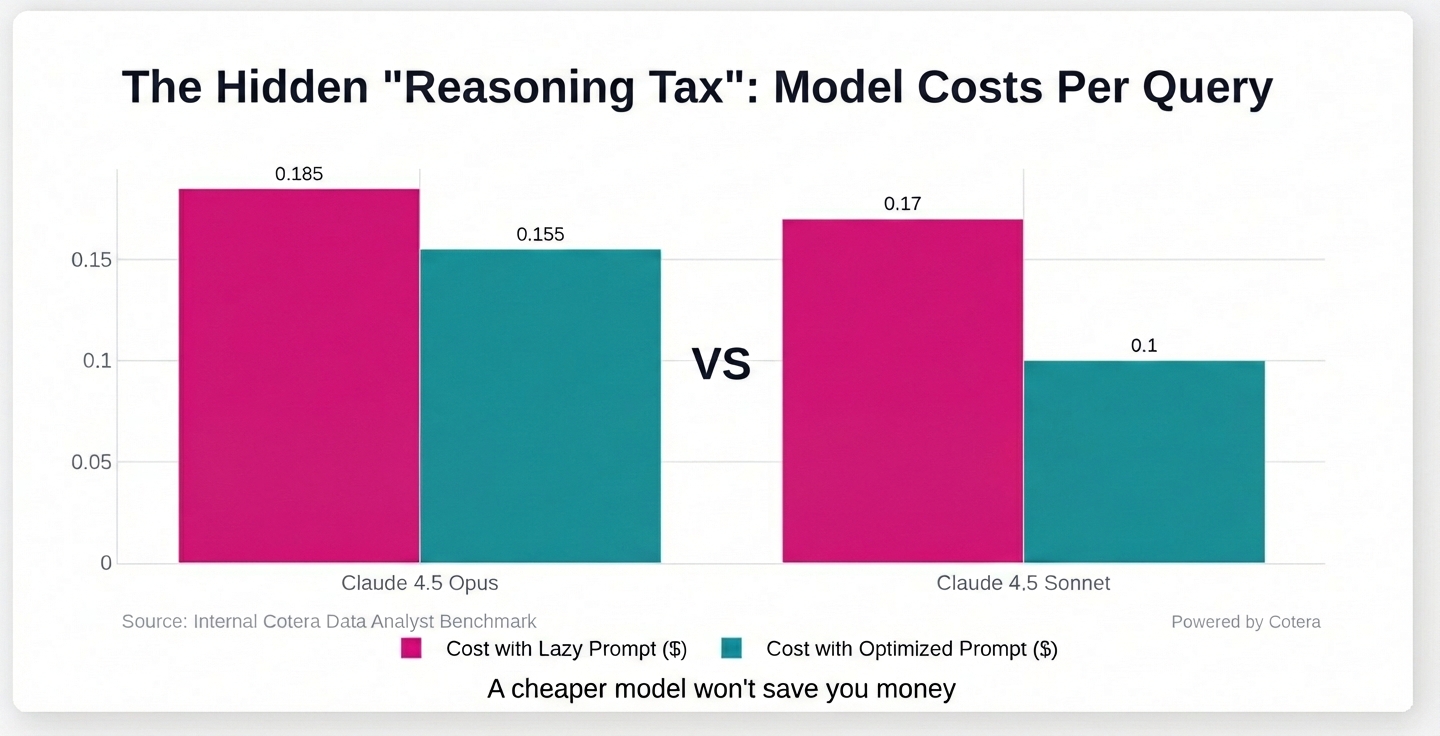

The Bill

Sonnet costs about 2/3 as much as Opus per token. So I expected the final number to reflect that.

Nope.

- Opus: $0.185

- Sonnet: $0.17

Basically the same.

What happened: my prompt was lazy. I just said "look at these tables and give me the SQL." Sonnet had to do a lot more work to figure out what I meant. It queried the schema, thought about the relationships, realized it was missing context, queried again, then finally wrote the SQL.

Opus just... got it faster. Fewer round trips.

Sonnet used almost twice as many tokens to reach the same answer. So yeah, the per-token rate was cheaper, but I paid for nearly twice the volume.

The Fix

I added one sentence to the prompt:

"Make a query using the dataset tool to ingest 50 sample rows from each table, and match the names of the headers."

That's it.

- Opus dropped by 3 cents.

- Sonnet dropped by 7 cents.

Sonnet went from $0.17 to about $0.10 per run. Now it's actually cheaper.

The sample data gave the model enough context upfront that it didn't have to guess. No hallucinated column names, no extra describe queries.
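Here's roughly what that one sentence asks the agent to do, sketched with Python's built-in sqlite3 as a stand-in for the @dataset/query/execute tool, whose actual interface isn't shown here. `sample_context` is a hypothetical helper: for each table it runs a `LIMIT 50` query, reads the header names off the cursor, and formats both as plain text the model can see before it writes any SQL. The companies columns in the demo are made up; a real run would cover the five aka table names from the prompt.

```python
import sqlite3


def sample_context(conn: sqlite3.Connection, tables: list[str], limit: int = 50) -> str:
    """Return each table's header names plus up to `limit` rows, as plain text."""
    sections = []
    for table in tables:
        # Table names come from our own fixed list, so formatting them in is safe here;
        # never do this with user-supplied names.
        cursor = conn.execute(f"SELECT * FROM {table} LIMIT ?", (limit,))
        headers = [column[0] for column in cursor.description]
        lines = [f"Table {table}: " + ", ".join(headers)]
        lines += [", ".join(str(value) for value in row) for row in cursor.fetchall()]
        sections.append("\n".join(lines))
    return "\n\n".join(sections)


# Demo with a made-up companies table of 60 rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE companies (company_id INTEGER, name TEXT, industry TEXT)")
conn.executemany(
    "INSERT INTO companies VALUES (?, ?, ?)",
    [(i, f"Company {i}", "Fintech") for i in range(1, 61)],
)
context = sample_context(conn, ["companies"])
print(context.splitlines()[0])  # Table companies: company_id, name, industry
```

The point is the header line: once the real column names are in the context, the model has nothing to guess, and a single query per table replaces the back-and-forth of describe calls.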

The Chart

Opus improved a little. Sonnet improved a lot.

What I Took Away

Sonnet isn't bad. It was one of only two models that could even do the hard SQL problem. The Gemini Flash models didn't come close (though Gemini Nano was surprisingly good on the easy ones for almost nothing).

But if you throw a vague prompt at a cheaper model and expect it to just figure things out, you're going to burn tokens on reasoning that a better prompt would've made unnecessary.

Price per token isn't the number that matters. Price per correct answer is.
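One way to put a number on that: divide what a run cost by how many answers it got right. This is a minimal sketch using the per-run costs from this post. It assumes one run covers all three questions, and that both models still got all three right after the prompt fix, which the post implies but doesn't state outright. `cost_per_correct_answer` is a made-up helper, not part of any tool.

```python
import math


def cost_per_correct_answer(run_cost_usd: float, correct_answers: int) -> float:
    """Spend per correct answer; with zero correct answers, every dollar is wasted."""
    if correct_answers <= 0:
        return math.inf
    return run_cost_usd / correct_answers


# Per-run costs from this post. Three correct answers per run is assumed for
# the runs after the prompt fix; the post only states 3/3 for the original prompt.
runs = {
    "Opus, original prompt": (0.185, 3),
    "Sonnet, original prompt": (0.17, 3),
    "Opus, sample-rows prompt": (0.185 - 0.03, 3),
    "Sonnet, sample-rows prompt": (0.10, 3),
}

for label, (cost, correct) in runs.items():
    print(f"{label}: ${cost_per_correct_answer(cost, correct):.3f} per correct answer")
```

A run with zero correct answers comes out as infinity no matter how little it cost, which is why a model that only handles the easy questions can still be the expensive choice for the hard one.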

We help companies figure out which of their AI workflows are actually efficient. If you want to see where you're spending, hit me up.

The Original Prompt

Here's the exact prompt I used for the benchmark:

Benchmark - Write a SQL Query

Intro:

Your job is to look at the tables that I give you and provide me sql queries that give answers to what I am asking you to pull data for - please give me the answers in standard SQL, as this is very important - none of these should use specific functions.

The tables you have access to:

You have access to 5 tables from CloudMetrics, a B2B SaaS analytics platform:

- Benchmark - Companies - Customer accounts (aka "companies")

- Benchmark - Users - Individual users within companies (aka "users")

- Benchmark - Subscriptions - Subscription records (includes historical upgrades/churns) (aka "subscriptions")

- Benchmark - Feature Usage - Daily feature usage logs (aka "feature_usage")

- Benchmark - Invoices - Monthly billing records (aka "invoices")

You will take the query I give you and come up with the simplest snowflake-compatible sql response you can.

Make SURE that you use the "aka" table names in the query that you sent me.

Instead of FROM "Benchmark - Companies" it's actually FROM companies

Tools you have access to:

- @dataset/query/execute - please use this to understand the data that is present in the tables to make sure that you choose the right tables to answer.

- @modal/request - you must submit your answers to this curl endpoint I give you.